SPINN: Synergistic Progressive Inference of Neural Networks over device and cloud

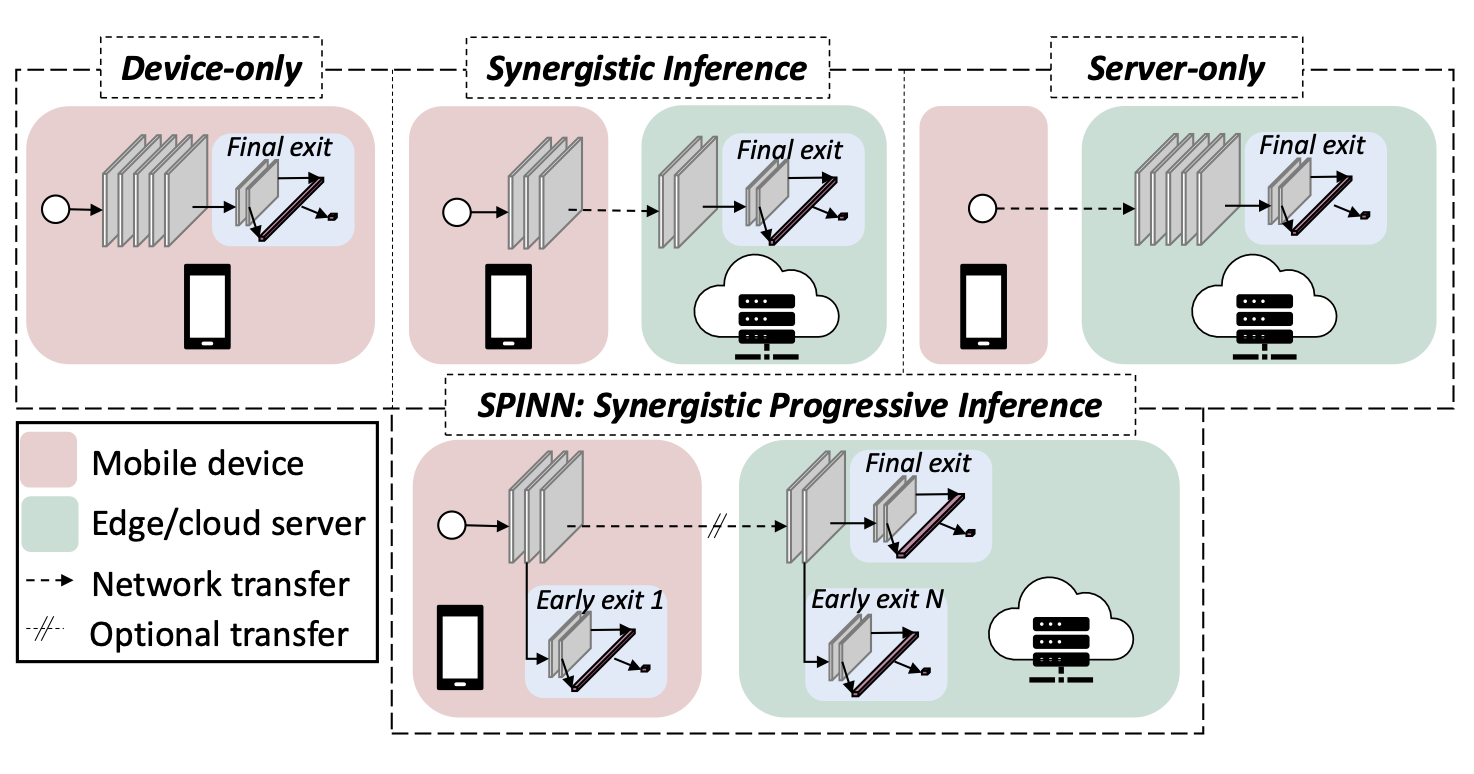

Despite the soaring use of convolutional neural networks (CNNs) in mobile applications, uniformly sustaining high-performance inference on mobile has been elusive due to the excessive computational demands of modern CNNs and the increasing diversity of deployed devices. A popular alternative comprises offloading CNN processing to powerful cloud-based servers. Nevertheless, by relying on the cloud to produce outputs, emerging mission-critical and high-mobility applications, such as drone obstacle avoidance or interactive applications, can suffer from the dynamic connectivity conditions and the uncertain availability of the cloud. In this paper, we propose SPINN, a distributed inference system that employs synergistic device-cloud computation together with a progressive inference method to deliver fast and robust CNN inference across diverse settings. The proposed system introduces a novel scheduler that co-optimises the early-exit policy and the CNN splitting at run time, in order to adapt to dynamic conditions and meet user-defined service-level requirements. Quantitative evaluation illustrates that SPINN outperforms its state-of-the-art collaborative inference counterparts by up to 2× in achieved throughput under varying network conditions, reduces the server cost by up to 6.8× and improves accuracy by 20.7% under latency constraints, while providing robust operation under uncertain connectivity conditions and significant energy savings compared to cloud-centric execution.

Authors: S. Laskaridis*, S. I. Venieris*, M. Almeida*, I. Leontiadis, N. D. Lane

Published at: International Conference on Mobile Computing and Networking (MobiCom’20)

Overview

SPINN combines progressive (early-exit) inference with collaborative device–cloud execution. At run time, the system jointly chooses where to split the network between device and server and when to exit early, adapting to changing network conditions and workload demands.
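To make the joint choice of split point and early-exit policy concrete, here is a minimal sketch of the idea. It is not the scheduler from the paper. It assumes a hypothetical 4-layer network, and every number, name, and profile format (`DEVICE_MS`, `SERVER_MS`, `INPUT_KB`, `RTT_MS`, `EXIT_PROFILES`, `choose_config`) is invented for illustration. The sketch enumerates every (split point, exit threshold) pair, discards those below a required accuracy, and keeps the pair with the lowest expected latency for the current bandwidth. The cost of returning the result to the device is ignored.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical per-layer profile for a 4-layer network (illustrative numbers only).
DEVICE_MS = [10.0, 20.0, 30.0, 40.0]    # per-layer latency on the device
SERVER_MS = [1.0, 2.0, 3.0, 4.0]        # per-layer latency on the server
INPUT_KB = [150.0, 400.0, 100.0, 25.0]  # size of the tensor fed into each layer
RTT_MS = 20.0                           # fixed overhead of one offload

# Hypothetical early-exit profile: confidence threshold -> (accuracy,
# fraction of all samples that exit after each layer). Fractions sum to 1.
EXIT_PROFILES = {
    0.6: (0.70, [0.0, 0.5, 0.0, 0.5]),
    0.9: (0.75, [0.0, 0.2, 0.0, 0.8]),
}


@dataclass(frozen=True)
class Config:
    split: int          # layers [0, split) run on the device, the rest on the server
    threshold: float    # early-exit confidence threshold
    latency_ms: float   # expected end-to-end latency
    accuracy: float


def expected_latency_ms(split, exit_frac, bandwidth_mbps):
    """Expected latency, weighting each cost by the fraction of samples still running."""
    alive = 1.0
    total = 0.0
    for i in range(len(DEVICE_MS)):
        if i == split:
            # kilobytes * 8 = kilobits; kilobits / Mbps = milliseconds
            total += alive * (RTT_MS + INPUT_KB[i] * 8 / bandwidth_mbps)
        total += alive * (DEVICE_MS[i] if i < split else SERVER_MS[i])
        alive -= exit_frac[i]
    return total


def choose_config(bandwidth_mbps, min_accuracy) -> Optional[Config]:
    """Return the lowest-latency configuration that meets the accuracy target, if any."""
    candidates = [
        Config(split, thr, expected_latency_ms(split, frac, bandwidth_mbps), acc)
        for thr, (acc, frac) in EXIT_PROFILES.items()
        if acc >= min_accuracy
        for split in range(len(DEVICE_MS) + 1)  # split == len(...) means device-only
    ]
    return min(candidates, key=lambda c: c.latency_ms, default=None)


for mbps in (1, 20, 100):
    print(mbps, choose_config(mbps, min_accuracy=0.70))
```

With these made-up profiles, the sketch keeps all layers on the device at 1 Mbps, offloads only the last layer at 20 Mbps, and offloads the whole network at 100 Mbps. A real scheduler would re-run a search like this as measured bandwidth and server load change.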

SPINN improves throughput, reduces server costs, and delivers higher accuracy under latency constraints compared to existing collaborative inference systems, while also offering energy savings on the device.

Reference

@inproceedings{laskaridis2020spinn,
  title={SPINN: Synergistic progressive inference of neural networks over device and cloud},
  author={Laskaridis, Stefanos and Venieris, Stylianos I and Almeida, Mario and Leontiadis, Ilias and Lane, Nicholas D},
  booktitle={Proceedings of the 26th annual international conference on mobile computing and networking},
  pages={1--15},
  year={2020}
}