Recurrent Early Exits for Federated Learning with Heterogeneous Clients

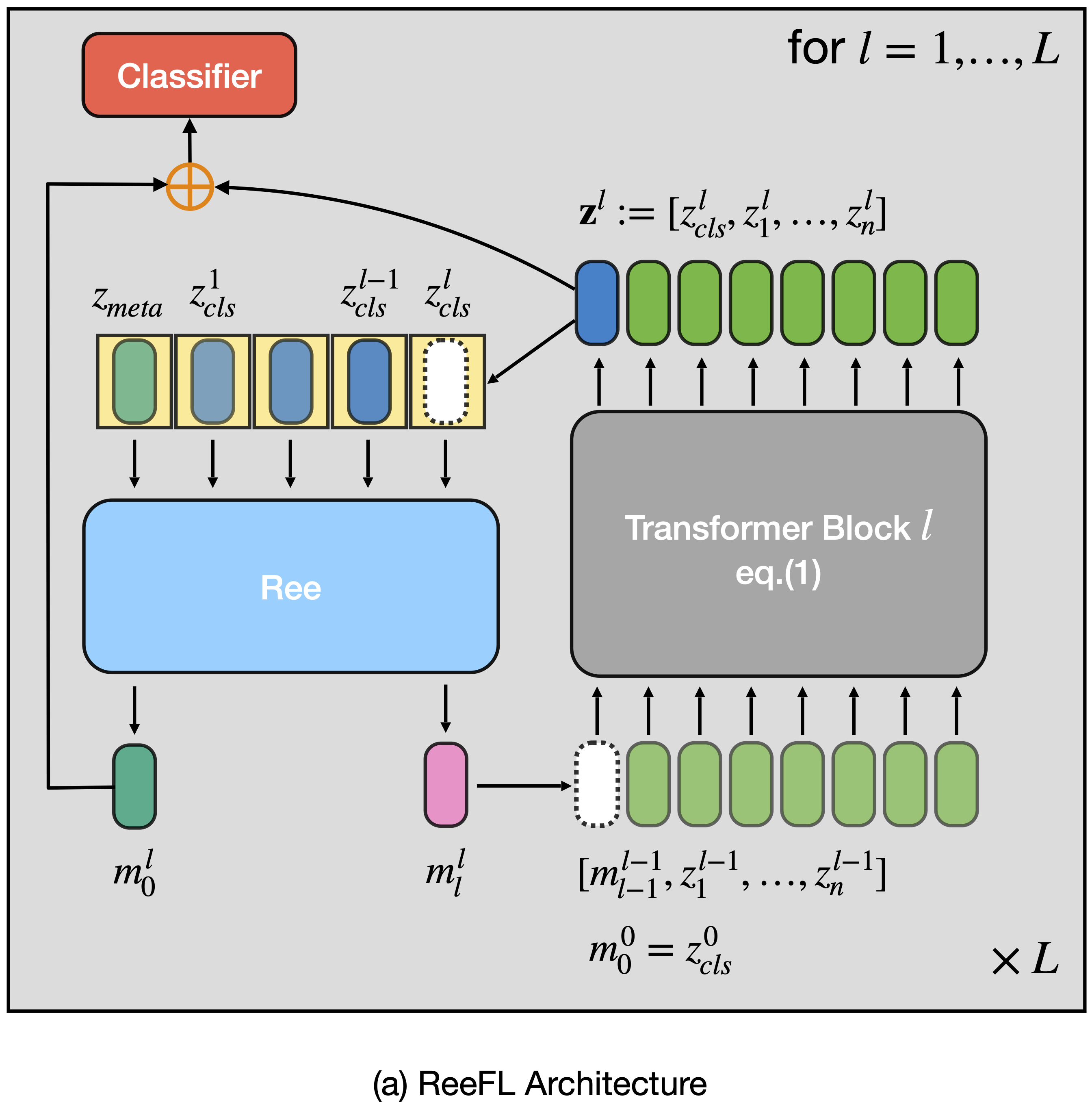

Federated learning (FL) has enabled distributed learning of a model across multiple clients in a privacy-preserving manner. One of the main challenges of FL is accommodating clients with varying hardware capacities, i.e., clients with different compute and memory budgets. To tackle this challenge, recent state-of-the-art approaches leverage early exits. Nonetheless, these approaches fall short of mitigating the challenges of jointly learning multiple exit classifiers, often relying on hand-picked heuristic solutions for knowledge distillation among classifiers and/or utilizing additional layers for weaker classifiers. In this work, instead of utilizing multiple classifiers, we propose a recurrent early exit approach named ReeFL that fuses features from different sub-models into a single shared classifier. Specifically, we use a transformer-based early-exit module shared among sub-models to i) better exploit multi-layer feature representations for task-specific prediction and ii) modulate the feature representation of the backbone model for subsequent predictions. We additionally present a per-client self-distillation approach where the best sub-model is automatically selected as the teacher of the other sub-models at each client. Our experiments on standard image and speech classification benchmarks across various emerging federated fine-tuning baselines demonstrate ReeFL’s effectiveness over previous works.
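
In early-exit FL, a client with a smaller compute or memory budget typically trains only a shallower sub-model, i.e., the backbone truncated at an earlier exit. The sketch below illustrates that assignment step. It is not ReeFL's code. It assumes, hypothetically, that each client's capacity is a single budget number in the same units as a per-layer cost. The function names `deepest_exit` and `assign_submodels`, the client ids, and the cost values are all illustrative.

```python
def deepest_exit(layer_costs, budget):
    """Number of backbone layers (i.e. the deepest exit) whose cumulative cost fits the budget."""
    used, depth = 0.0, 0
    for cost in layer_costs:
        if used + cost > budget:
            break  # the next layer would exceed this client's budget
        used += cost
        depth += 1
    return depth


def assign_submodels(layer_costs, client_budgets):
    """Map each (hypothetical) client id to the depth of the sub-model it trains locally."""
    return {cid: deepest_exit(layer_costs, b) for cid, b in client_budgets.items()}


layer_costs = [1.0] * 12  # assumed: a 12-layer backbone with unit cost per layer
budgets = {"phone": 3.5, "laptop": 8.0, "workstation": 20.0}  # assumed budgets
print(assign_submodels(layer_costs, budgets))
# {'phone': 3, 'laptop': 8, 'workstation': 12}
```

Every client shares the same lower layers, so the server can still combine their updates. Only clients deep enough to reach a given layer contribute to that layer.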

Authors: Royson Lee, Javier Fernandez-Marques, Shell Xu Hu, Da Li, Stefanos Laskaridis, Łukasz Dudziak, Timothy Hospedales, Ferenc Huszár, Nicholas D. Lane

Published at: International Conference on Machine Learning (ICML’24)

Overview

We introduced ReeFL, a new way to handle system heterogeneity in federated learning using recurrent early exits. Instead of training multiple independent exit classifiers or relying on brittle heuristics, we designed a shared transformer-based early-exit module that aggregates information across layers and feeds it back into subsequent predictions.
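The idea of one exit module reused at every depth can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation. The hypothetical `SharedExitModule` is a single attention head with no multi-head split, feed-forward block, or normalization. At each exit, it attends over the features collected from every layer so far and passes the fused summary to one shared classifier. The feedback step in the hypothetical `forward_with_exits` adds that summary back into the backbone's hidden state. This residual-add rule is an assumption chosen for simplicity and may differ from ReeFL's exact modulation.

```python
import math
import random


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(W, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


class SharedExitModule:
    """Hypothetical single-head attention exit reused at every exit point."""

    def __init__(self, dim, num_classes, seed=0):
        rng = random.Random(seed)

        def init(rows, cols):
            return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]

        self.dim = dim
        self.Wq, self.Wk, self.Wv = init(dim, dim), init(dim, dim), init(dim, dim)
        self.Wcls = init(num_classes, dim)  # ONE classifier shared by all exits

    def __call__(self, history):
        # history: per-layer features (e.g. CLS tokens) seen so far, newest last.
        q = matvec(self.Wq, history[-1])
        keys = [matvec(self.Wk, h) for h in history]
        vals = [matvec(self.Wv, h) for h in history]
        scale = 1.0 / math.sqrt(self.dim)
        weights = softmax([scale * sum(a * b for a, b in zip(q, k)) for k in keys])
        fused = [sum(w * v[d] for w, v in zip(weights, vals)) for d in range(self.dim)]
        return matvec(self.Wcls, fused), fused


def forward_with_exits(layers, x, exit_module):
    """Run the backbone and return one logit vector per exit."""
    h, history, exit_logits = x, [], []
    for layer in layers:
        h = layer(h)
        history.append(h)
        logits, fused = exit_module(history)
        exit_logits.append(logits)
        # Assumed feedback: add the fused summary back into the hidden state
        # so the next layer (and therefore the next exit) is modulated by it.
        h = [a + b for a, b in zip(h, fused)]
    return exit_logits


layers = [lambda h: [math.tanh(v) for v in h] for _ in range(4)]  # toy backbone
exit_module = SharedExitModule(dim=8, num_classes=5)
logits = forward_with_exits(layers, [0.1 * i for i in range(8)], exit_module)
print(len(logits), len(logits[0]))  # 4 5
```

Because the attention history grows with depth, a later exit can draw on every earlier layer's features. All exits still share the same parameters.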

To further adapt to client heterogeneity, we incorporated per-client self-distillation, automatically selecting the strongest available sub-model as a teacher. Across image and speech federated benchmarks, ReeFL improves performance and flexibility compared to prior early-exit approaches, while simplifying training and deployment in heterogeneous federated environments.
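Per-client self-distillation can be sketched as follows. This is a simplified illustration, not ReeFL's exact loss. The sketch assumes that "strongest" means the exit with the lowest cross-entropy on the client's current sample. It also substitutes standard Hinton-style distillation, with temperature-scaled KL divergence and T² scaling, as the teacher-to-student term. The function names, `temperature`, and `alpha` are hypothetical. A client only passes in the exits its sub-model can run, so the teacher is chosen per client.

```python
import math


def log_softmax(logits, temperature=1.0):
    z = [v / temperature for v in logits]
    m = max(z)
    log_norm = m + math.log(sum(math.exp(v - m) for v in z))
    return [v - log_norm for v in z]


def kl_divergence(p_log, q_log):
    """KL(p || q) computed from log-probabilities."""
    return sum(math.exp(p) * (p - q) for p, q in zip(p_log, q_log))


def self_distillation_loss(exit_logits, label, temperature=2.0, alpha=0.5):
    """exit_logits: one logit vector per exit this client can run."""
    ce = [-log_softmax(logits)[label] for logits in exit_logits]
    # Assumed selection rule: the exit with the lowest cross-entropy is the teacher.
    teacher = min(range(len(exit_logits)), key=lambda i: ce[i])
    # In an autodiff framework the teacher distribution would be detached here.
    t_log = log_softmax(exit_logits[teacher], temperature)
    total = 0.0
    for i, logits in enumerate(exit_logits):
        if i == teacher:
            total += ce[i]  # the teacher learns from the label only
        else:
            kd = kl_divergence(t_log, log_softmax(logits, temperature))
            total += (1 - alpha) * ce[i] + alpha * temperature ** 2 * kd
    return total / len(exit_logits), teacher


exits = [[0.1, 0.2, 0.0], [2.0, 0.1, 0.0], [1.0, 0.5, 0.0]]
loss, teacher = self_distillation_loss(exits, label=0)
print(teacher)  # 1
```

Selecting the teacher dynamically avoids hand-picking a fixed teacher exit. This matters when clients can run different numbers of exits.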

Reference

@inproceedings{lee2024reefl_openreview,
  title     = {Recurrent Early Exits for Federated Learning with Heterogeneous Clients},
  author    = {Lee, Royson and Fernandez-Marques, Javier and Hu, Shell Xu and Li, Da and Laskaridis, Stefanos and Dudziak, {\L}ukasz and Hospedales, Timothy and Husz{\'a}r, Ferenc and Lane, Nicholas D.},
  booktitle = {International Conference on Machine Learning (ICML)},
  year      = {2024},
  url       = {https://openreview.net/forum?id=w4B42sxNq3}
}