MELTing Point: Mobile Evaluation of Language Transformers

Transformers have revolutionized the machine learning landscape, gradually making their way into everyday tasks and equipping our computers with “sparks of intelligence”. However, their runtime requirements have prevented them from being broadly deployed on mobile. As personal devices become increasingly powerful and prompt privacy becomes an ever more pressing issue, we explore the current state of mobile execution of Large Language Models (LLMs). To achieve this, we have created our own automation infrastructure, MELT, which supports the headless execution and benchmarking of LLMs on device, supporting different models, devices and frameworks, including Android, iOS and Nvidia Jetson devices. We evaluate popular instruction fine-tuned LLMs and leverage different frameworks to measure their end-to-end and granular performance, tracing their memory and energy requirements along the way. Our analysis is the first systematic study of on-device LLM execution, quantifying performance, energy efficiency and accuracy across various state-of-the-art models, and showcases the state of on-device intelligence in the era of hyperscale models. Results highlight the performance heterogeneity across targets and corroborate that LLM inference is largely memory-bound. Quantization drastically reduces memory requirements and renders execution viable, but at a non-negligible accuracy cost. Given their energy footprint and thermal behavior, the continuous execution of LLMs remains elusive, as both factors negatively affect user experience. Last, our experience shows that the ecosystem is still in its infancy, and algorithmic as well as hardware breakthroughs can significantly shift the execution cost. We expect NPU acceleration and framework-hardware co-design to be the biggest bet towards efficient standalone execution, with the alternative of offloading tailored towards edge deployments.

Authors: Stefanos Laskaridis, Kleomenis Katevas, Lorenzo Minto, Hamed Haddadi

Published at: International Conference on Mobile Computing and Networking (MobiCom’24) and Efficient Systems for Foundation Models (ES-FoMo @ ICML’24)

Overview

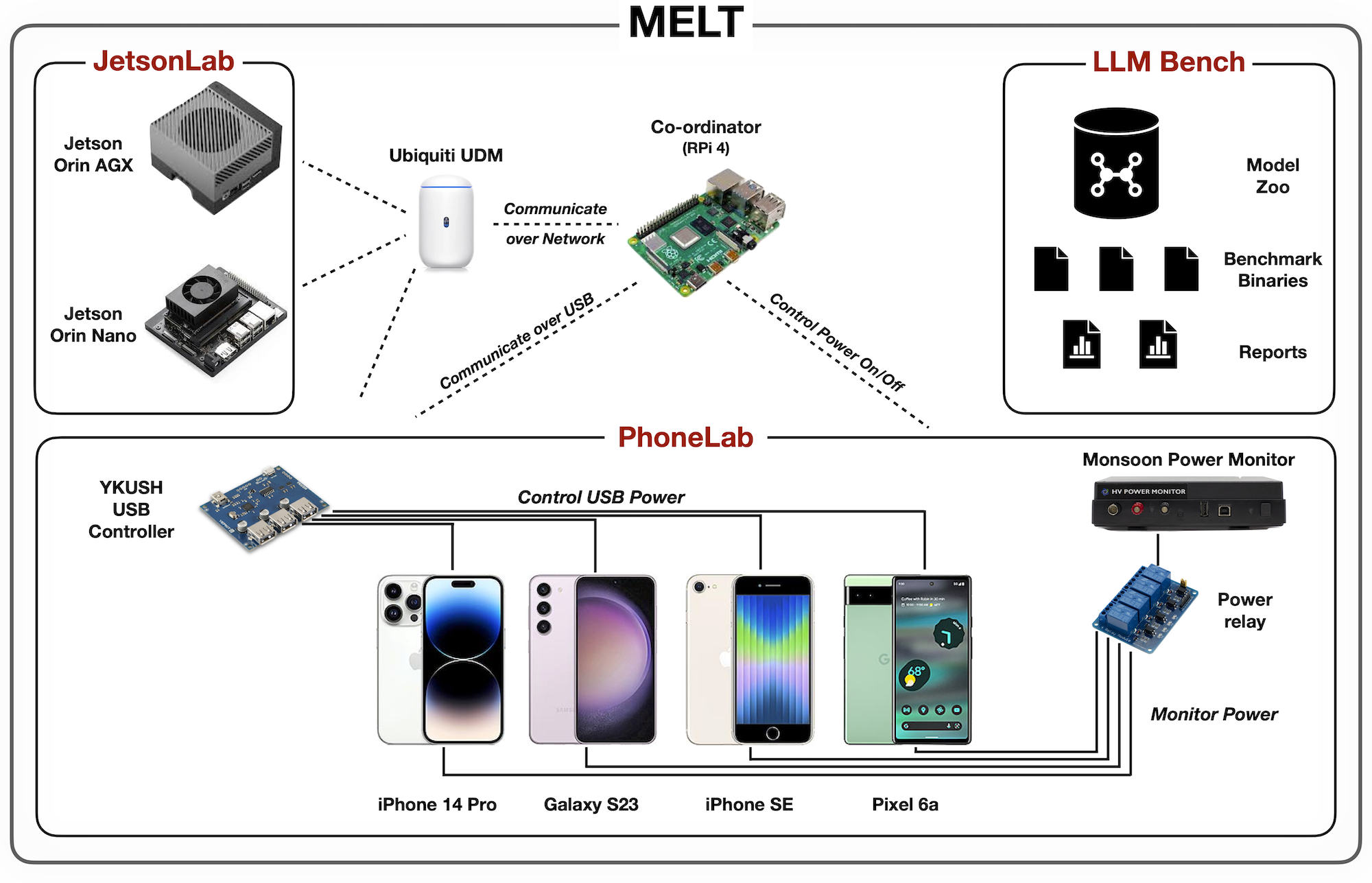

We built MELT, a system for evaluating instruction-tuned language models running entirely on device. Our goal was to move beyond proxy benchmarks and understand what really limits mobile LLMs in practice. MELT lets us run models headlessly on Android, iOS, and embedded platforms like Jetson, while collecting fine-grained measurements of latency, memory usage, and energy consumption across frameworks.

Using MELT, we showed that mobile LLM inference is fundamentally memory-bound, with large performance variability across devices and runtimes. Quantization makes on-device execution feasible, but often at a noticeable accuracy cost, and continuous inference remains constrained by energy and thermal limits. Our results highlight why careful system-level evaluation is critical before deploying LLMs on mobile hardware.
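As a rough illustration of why a memory-bound workload benefits so much from quantization, the sketch below estimates the weight footprint of a model and an upper bound on its decode rate. It is a back-of-envelope calculation, not MELT code and not a result from the paper: it assumes every weight is read from memory once per generated token, ignores the KV cache, activations and compute time, and the parameter count and bandwidth figure are hypothetical placeholders.

```python
def weight_footprint_gib(num_params: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights in GiB (weights only)."""
    return num_params * bits_per_weight / 8 / 2**30


def decode_tokens_per_second_bound(
    num_params: float, bits_per_weight: float, bandwidth_gb_s: float
) -> float:
    """Upper bound on tokens/s, assuming all weights are streamed once per token."""
    bytes_per_token = num_params * bits_per_weight / 8
    return bandwidth_gb_s * 1e9 / bytes_per_token


# Hypothetical inputs: a 7B-parameter model on a device with 50 GB/s of
# memory bandwidth. Neither number is taken from the paper.
NUM_PARAMS = 7e9
BANDWIDTH_GB_S = 50.0

for bits in (16, 8, 4):
    size = weight_footprint_gib(NUM_PARAMS, bits)
    rate = decode_tokens_per_second_bound(NUM_PARAMS, bits, BANDWIDTH_GB_S)
    print(f"{bits:>2}-bit weights: {size:6.2f} GiB, at most {rate:5.1f} tokens/s")
```

Under these assumptions, halving the bits per weight halves the footprint and doubles the bound on decode rate, which is why quantization can turn an infeasible deployment into a feasible one. The model says nothing about the accuracy cost, which has to be measured separately.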

Reference

@inproceedings{laskaridis2024melting,
  title={Melting point: Mobile evaluation of language transformers},
  author={Laskaridis, Stefanos and Katevas, Kleomenis and Minto, Lorenzo and Haddadi, Hamed},
  booktitle={Proceedings of the 30th Annual International Conference on Mobile Computing and Networking},
  pages={890--907},
  year={2024}
}