NoEsis: A Modular LLM with Differentially Private Knowledge Transfer

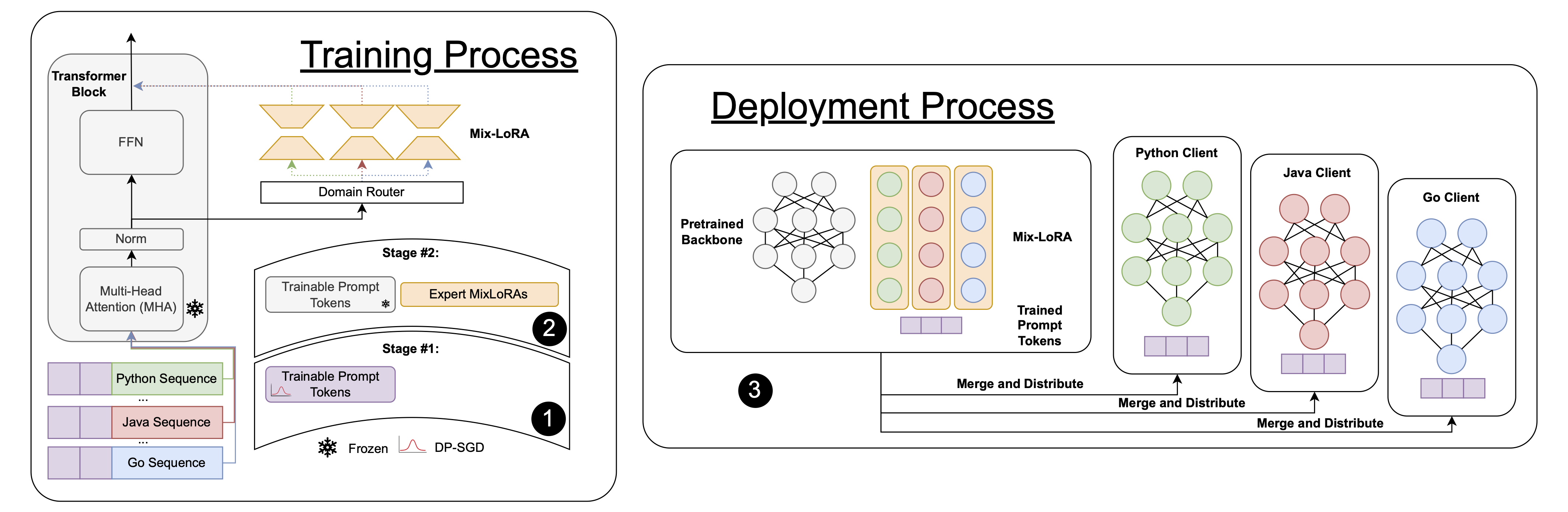

Large Language Models (LLMs) are typically trained on vast amounts of data drawn from various sources. Even when designed modularly (e.g., as a Mixture-of-Experts), LLMs can leak private information about their sources. Conversely, training such models in isolation arguably prohibits generalization. To this end, we propose NoEsis, a framework that builds upon the desired properties of modularity, privacy, and knowledge transfer. NoEsis integrates differential privacy with a hybrid two-stage parameter-efficient fine-tuning scheme that combines domain-specific low-rank adapters, acting as experts, with common prompt tokens, acting as a knowledge-sharing backbone. Results from our evaluation on CodeXGLUE show that NoEsis can achieve provable privacy guarantees with tangible knowledge transfer across domains, and empirically demonstrate protection against Membership Inference Attacks. Finally, on code completion tasks, NoEsis bridges at least 77% of the accuracy gap between the non-shared and the non-private baselines.

Authors: Rob Romijnders, Stefanos Laskaridis, Ali Shahin Shamsabadi, Hamed Haddadi

Published at: Workshop on Modularity for Collaborative, Decentralized, and Continual Deep Learning (ICLR’25)

Overview

We propose NoEsis, a privacy-preserving framework for adapting modular and Mixture-of-Experts (MoE) large language models while enabling controlled cross-domain knowledge transfer. NoEsis combines domain-specific low-rank adapters with a small set of shared prompt tokens, trained under differential privacy, to limit information leakage without fully isolating experts. Across code-completion benchmarks, this approach recovers most of the utility lost by private training while significantly reducing susceptibility to membership inference attacks.
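To make "shared prompt tokens, trained under differential privacy" more concrete, here is a minimal sketch of one private update to a shared parameter vector. It assumes a DP-SGD-style mechanism (per-example gradient clipping followed by Gaussian noise), which this page does not specify; the function names, the toy gradients, and the values of `max_norm`, `noise_multiplier`, and `lr` are all hypothetical. Privacy accounting (tracking the resulting privacy budget) is omitted.

```python
import math
import random


def clip_gradient(grad, max_norm):
    """Rescale one example's gradient so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]


def dp_mean_gradient(per_example_grads, max_norm, noise_multiplier, rng):
    """Clip each per-example gradient, sum, add Gaussian noise, then average.

    The noise standard deviation is noise_multiplier * max_norm, so the noise
    is calibrated to the largest influence a single example can have.
    """
    dim = len(per_example_grads[0])
    total = [0.0] * dim
    for grad in per_example_grads:
        clipped = clip_gradient(grad, max_norm)
        total = [t + c for t, c in zip(total, clipped)]
    sigma = noise_multiplier * max_norm
    noisy = [t + rng.gauss(0.0, sigma) for t in total]
    return [n / len(per_example_grads) for n in noisy]


def sgd_step(params, grad, lr):
    """Plain gradient-descent update on a flat parameter list."""
    return [p - lr * g for p, g in zip(params, grad)]


# Hypothetical toy setup: a 3-dimensional "shared prompt" and two per-example
# gradients, as if each came from a different domain's data.
rng = random.Random(0)
shared_prompt = [0.0, 0.0, 0.0]
batch_grads = [[3.0, 4.0, 0.0], [0.1, 0.0, 0.0]]

private_grad = dp_mean_gradient(
    batch_grads, max_norm=1.0, noise_multiplier=1.0, rng=rng
)
shared_prompt = sgd_step(shared_prompt, private_grad, lr=0.1)
```

In this sketch only the shared parameters receive the clipped, noised update. Under the same assumption, the domain-specific adapters would be trained separately on their own domain's data, so cross-domain influence flows only through the privately trained shared component.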

Reference

@inproceedings{romijnders2025noesis,
  title={NoEsis: A Modular {LLM} with Differentially Private Knowledge Transfer},
  author={Rob Romijnders and Stefanos Laskaridis and Ali Shahin Shamsabadi and Hamed Haddadi},
  booktitle={ICLR 2025 Workshop on Modularity for Collaborative, Decentralized, and Continual Deep Learning},
  year={2025},
  url={https://openreview.net/forum?id=UIzvc5u2Eu}
}